Infinite Canvas: An Artistic Exploration with AI

# What even is this?

The collaborative infinite canvas is an AI-generated image that extends endlessly in all directions. All changes you make are visible to everyone else in real time. The idea behind it is simple: move to the edge of the image, and you'll find a button that lets you generate a new AI image that connects with what's already there.

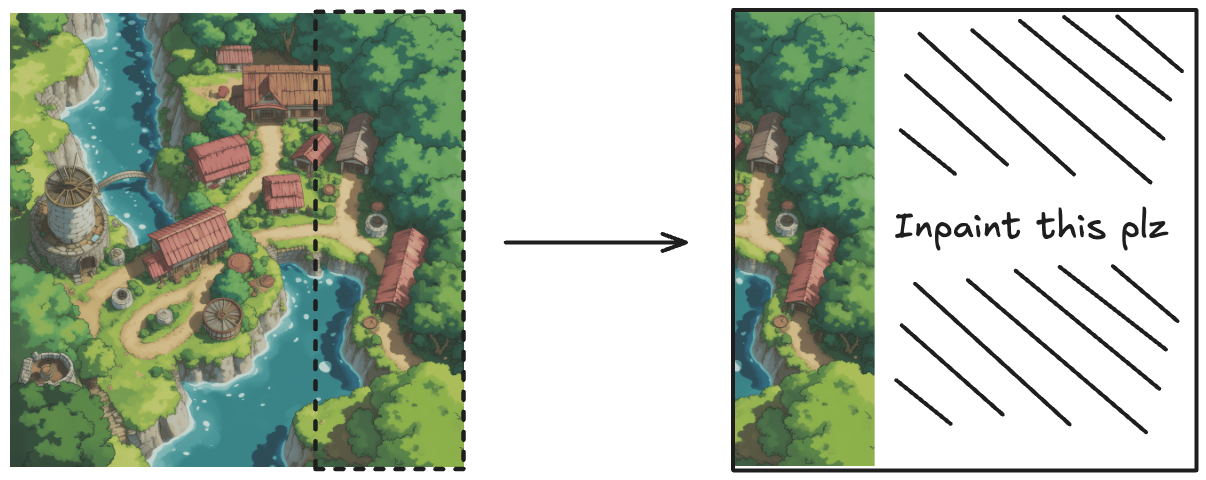

What interests me most about this process is observing how each newly generated image gradually drifts from the original starting point. With every extension, subtle differences accumulate, resulting in what I've been calling digital dementia[1].

This blog post is almost as important as the canvas itself, because I really wanted to share how it works, and the tricks I used to make it happen.

Before you continue, decide whether you want to read the technical details or not. Nerd mode includes some extra material that is not present in normal mode.

# How it works

There will be more in-depth technical details on how I made it work at all with the small infra budget I had (see section: Loading an "infinite" image), but here I'll focus on the AI side. It comes down to three parts: AI inpainting, the grid system, and biomes.

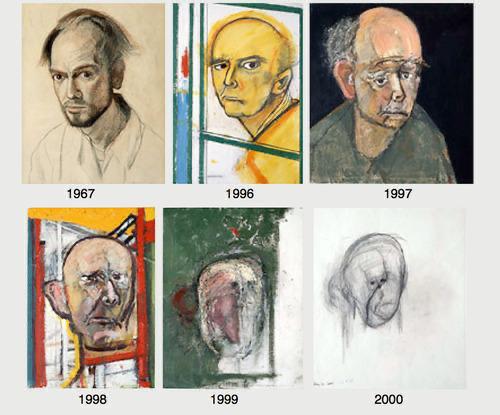

## AI Inpainting

AI inpainting is at the heart of this project: it's what enables each image to blend into the next one. The core idea is simple: the AI takes the edge of an existing image and generates the next part, creating visual continuity. Let's walk through an example.

There are three key components involved: the image to inpaint, the mask, and the prompt. The mask tells the AI model which areas to preserve and which to regenerate (white areas are retained, black areas are regenerated). The prompt guides what the model generates in the regenerated area. Together, they produce the final seamless image.

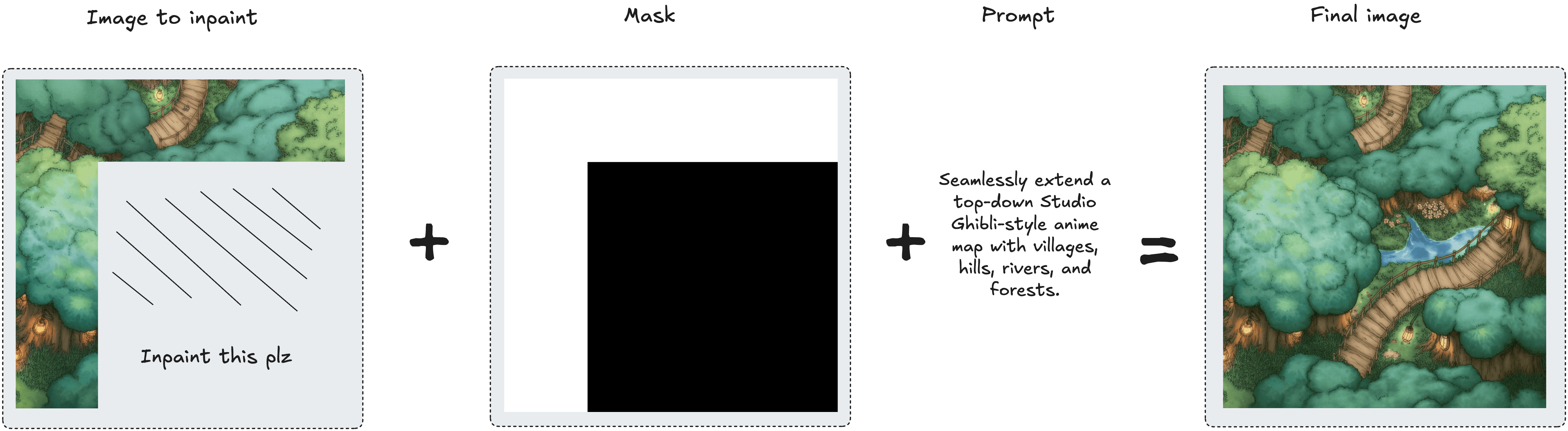
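To make the mask convention concrete, here is a minimal sketch (not the project's actual code) that builds a square grayscale mask for extending an image in one direction. The function name `buildMask`, the `overlap` parameter, and the assumption that `y` grows downward are all mine. It follows the convention described above (white is kept, black is regenerated); some inpainting APIs flip this, so check the one you use.

```javascript
// Hypothetical helper: one byte per pixel, 255 = white (keep), 0 = black (regenerate).
// `overlap` is the width in pixels of the strip copied from the existing image.
function buildMask(size, overlap, direction) {
  const mask = new Uint8Array(size * size); // starts all black: regenerate everything
  for (let y = 0; y < size; y++) {
    for (let x = 0; x < size; x++) {
      // The kept strip sits on the side of the new tile that touches the old image.
      const keep =
        (direction === "right" && x < overlap) ||
        (direction === "left" && x >= size - overlap) ||
        (direction === "down" && y < overlap) ||
        (direction === "up" && y >= size - overlap);
      if (keep) mask[y * size + x] = 255;
    }
  }
  return mask;
}

// Extending to the right: the left 2 columns of an 8x8 tile are preserved.
const exampleMask = buildMask(8, 2, "right");
```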

## The Grid System

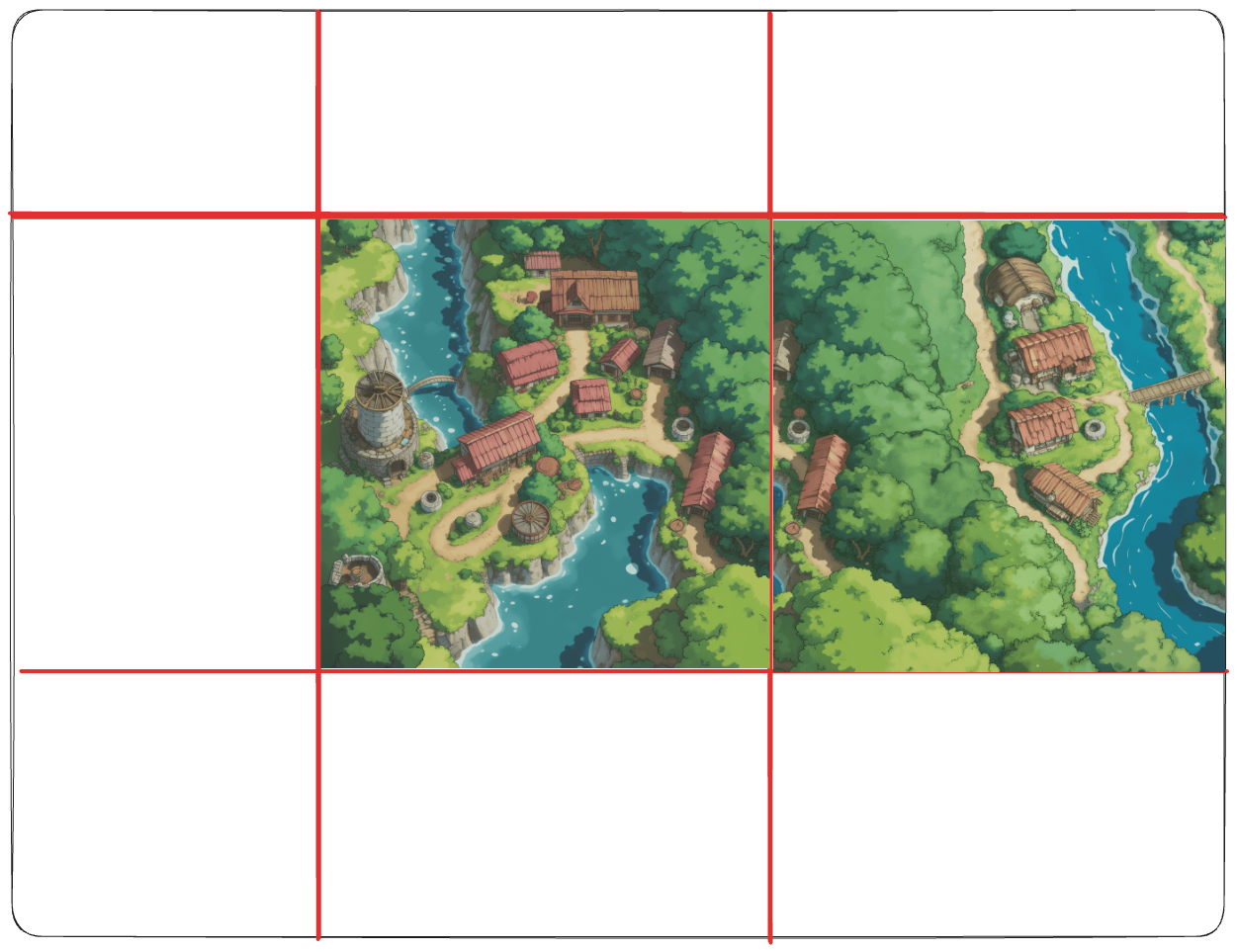

This *infinite* canvas is composed of a grid of cells, each of which may or may not (yet) have an image associated with it. Let's start with the simplest case:

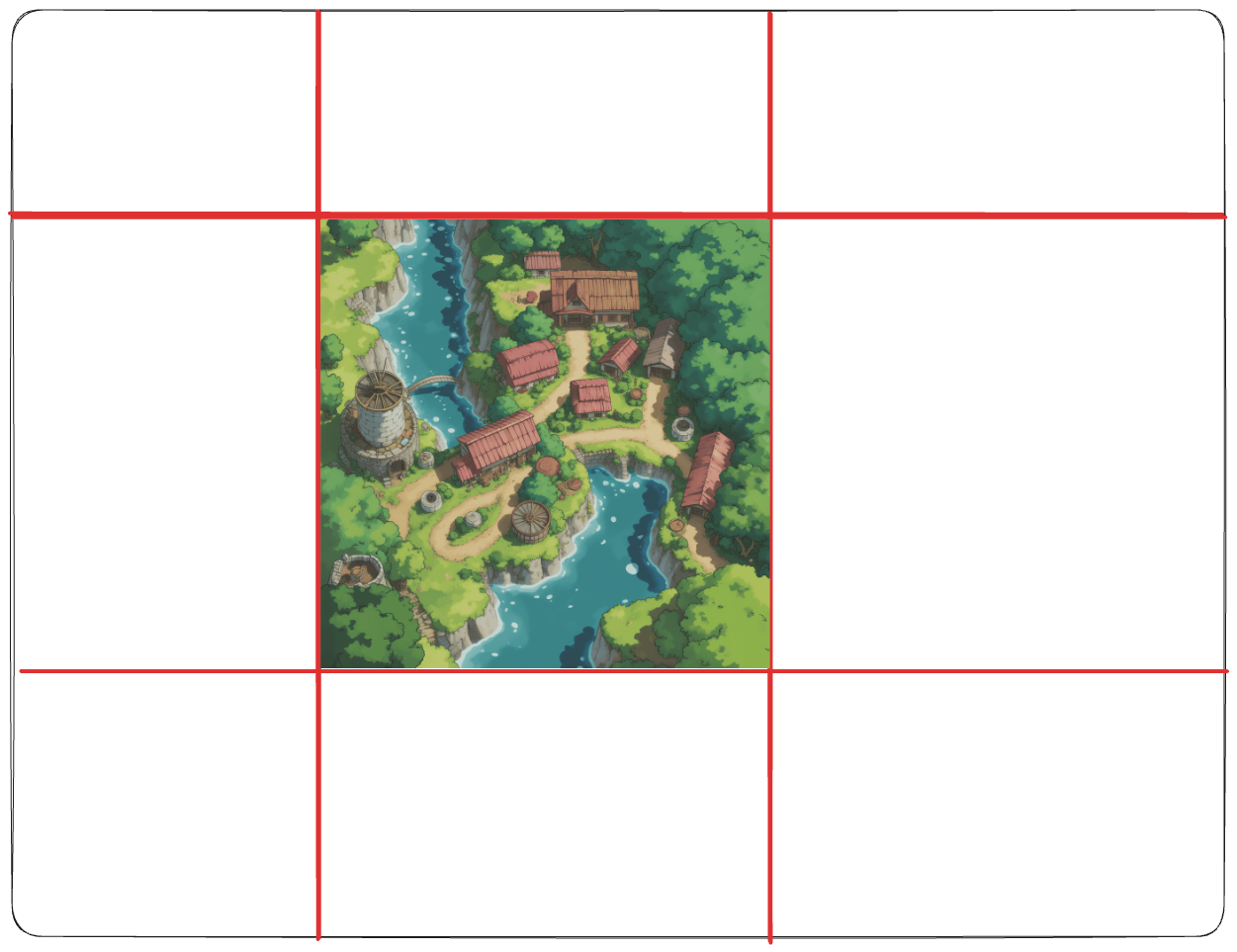

In this sketch, we see a grid with a single image on it. Let's say we want to generate the one immediately to the right. To do that, we slice off the right part of the image and pass it to the AI model to inpaint. To my surprise, the model does this part really well, but the problem is that if we were to slap the result directly to the right, it wouldn't look right:

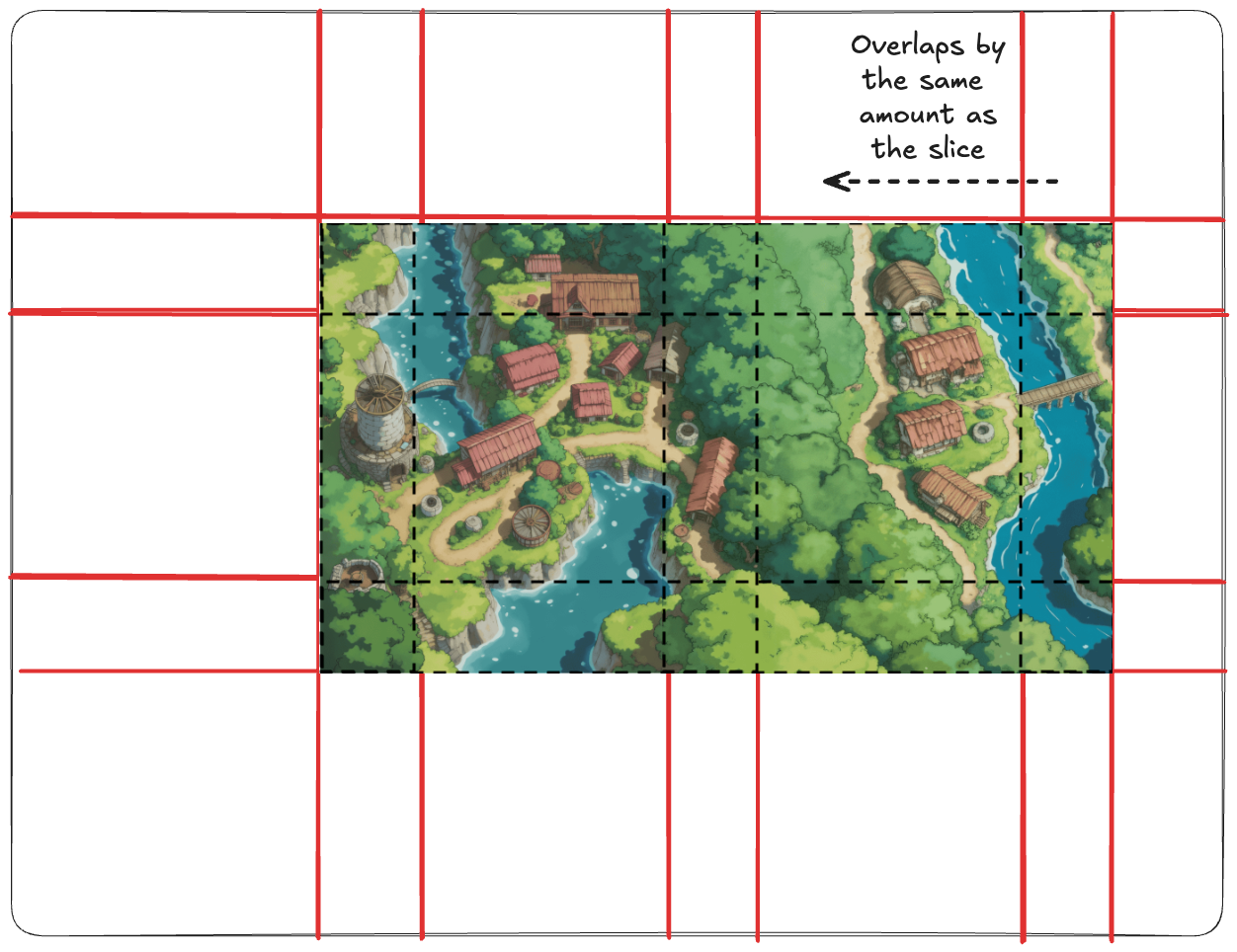

There is no continuity between the images; we need to overlap them by the same amount as the slice.

If we do this precisely, in all directions, we achieve our goal! This involved a bit of tricky JavaScript, but it worked perfectly. And yes, "infinite" was in italics because, in reality, it's not really infinite, but more on that in the next section.
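The overlap arithmetic can be sketched roughly like this. The tile size, the overlap width, and the function names are assumptions for illustration, not the values the project uses. The key point is that neighbouring cells are placed one stride (tile minus overlap) apart, so each new tile covers the slice it was generated from.

```javascript
// Assumed values for illustration only.
const TILE = 1024;   // tile width and height in pixels
const OVERLAP = 256; // width of the strip shared between neighbouring tiles
const STRIDE = TILE - OVERLAP;

// Top-left corner of a cell on the canvas, in pixels (cell 0,0 sits at the origin).
function cellOrigin(cx, cy) {
  return { left: cx * STRIDE, top: cy * STRIDE };
}

// Rectangle of the existing tile to copy into the new one before inpainting.
function sliceRect(direction) {
  switch (direction) {
    case "right": return { x: TILE - OVERLAP, y: 0, width: OVERLAP, height: TILE };
    case "left":  return { x: 0, y: 0, width: OVERLAP, height: TILE };
    case "down":  return { x: 0, y: TILE - OVERLAP, width: TILE, height: OVERLAP };
    case "up":    return { x: 0, y: 0, width: TILE, height: OVERLAP };
    default: throw new Error(`unknown direction: ${direction}`);
  }
}
```

With these numbers, the cell to the right of the origin starts at `cellOrigin(1, 0).left`, which is 768, so its first 256 pixels sit exactly on top of the slice taken from the first image.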

## Biomes and Features

My initial idea was to have a single prompt for all generated images and call it a day, but then I summoned the one and only Dani Torramilans and we went full-on down the rabbit hole. Dani built a Minecraft clone when he was like 17 and had once told me how the terrain generation algorithm worked. To him it was clear as day: we had to do the same for this project.

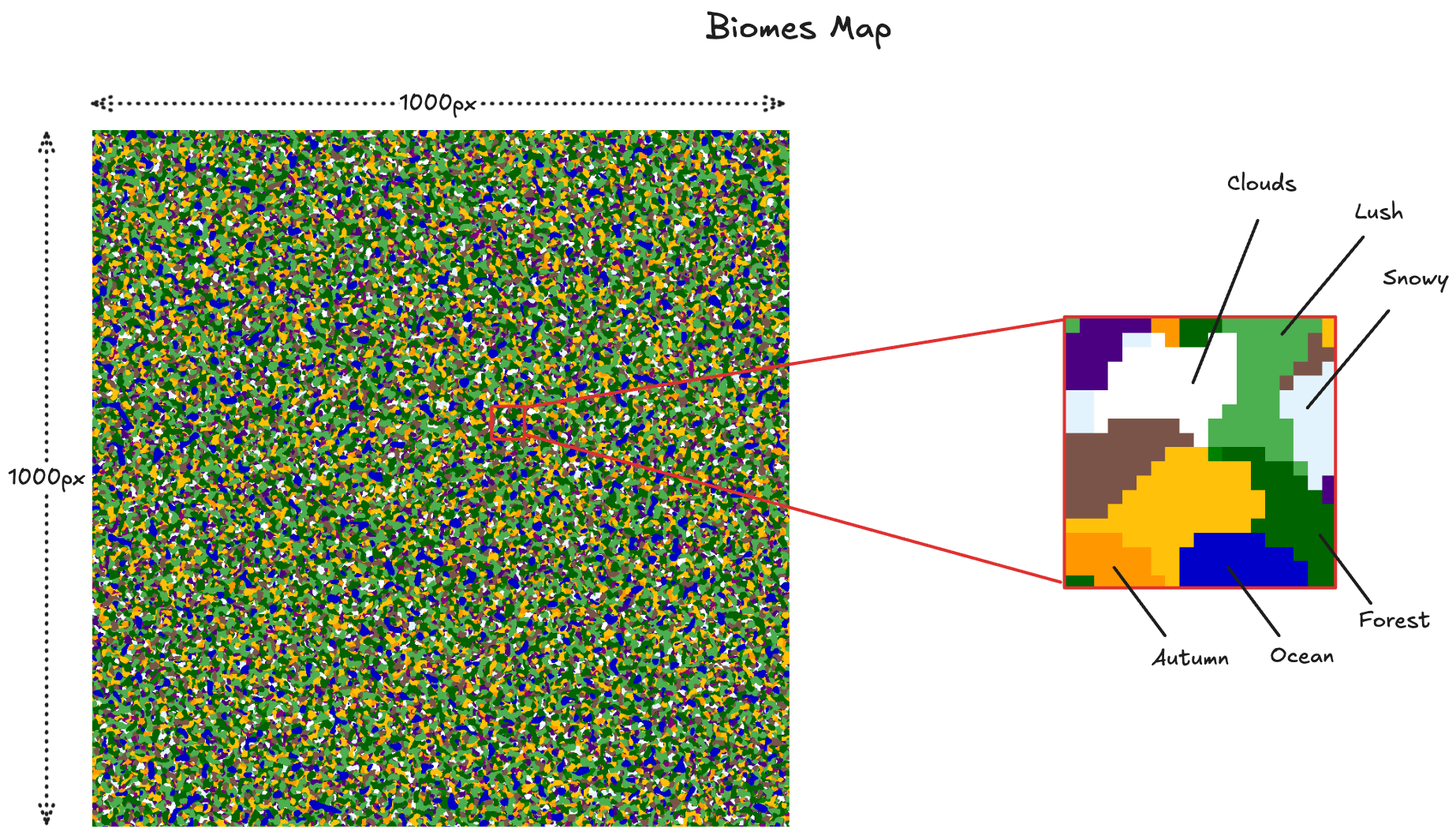

He created a Python script that generated a 1000x1000 image from Simplex noise. Each pixel of this image determines the biome of one cell in the canvas. When generating a new image, the system looks up the color at the cell's position, with the center of the map at 0,0 (e.g., pixel coordinates -5, 23). Each color corresponds to a particular biome, which maps to a prompt. For instance, red corresponds to a volcanic landscape, while blue corresponds to an ocean, and so on.

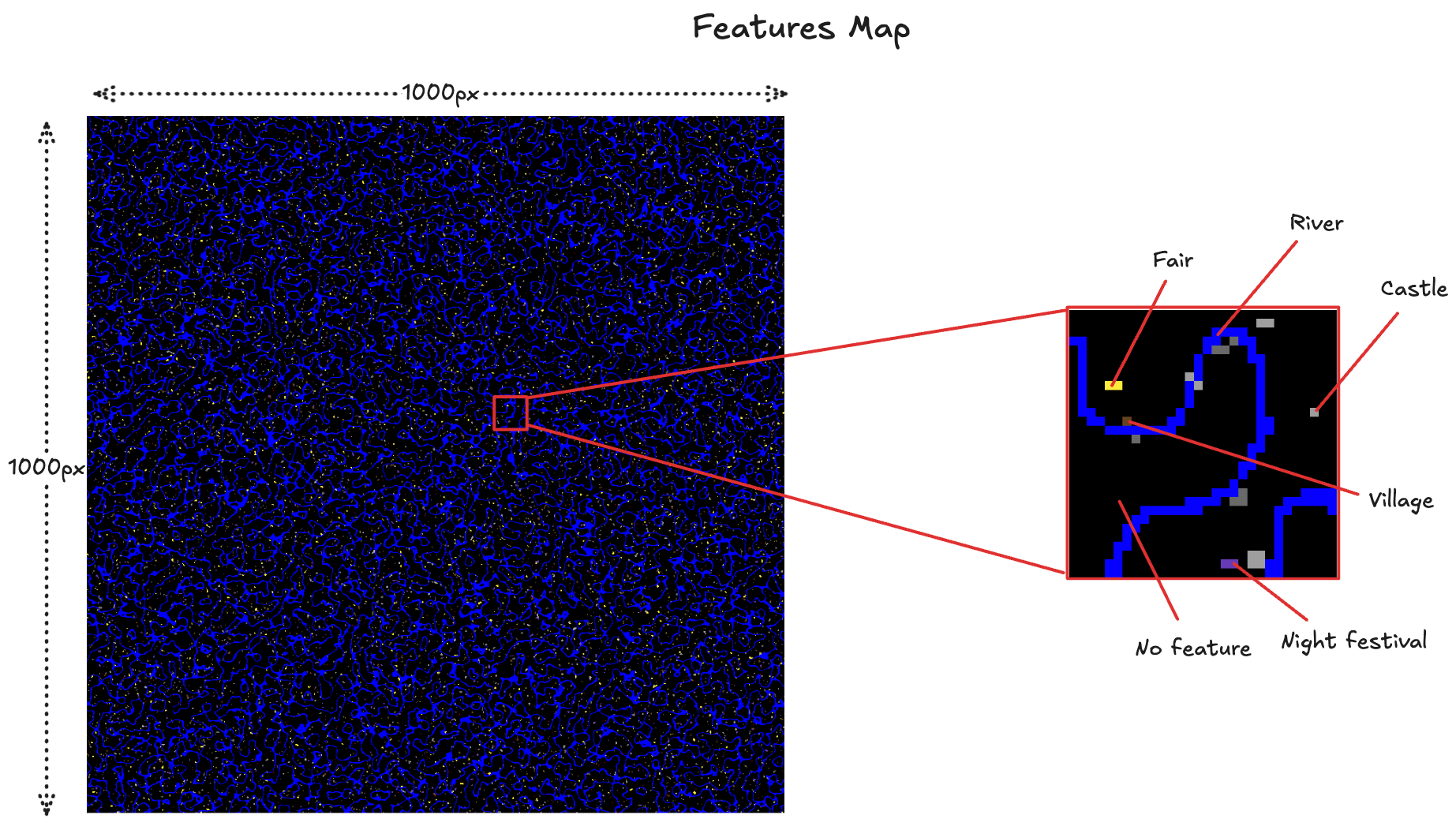
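As a rough sketch of the lookup (the real script and palette are not shown in this post), the cell coordinate can be shifted by half the map size to get a pixel position, and the pixel's color used as a key into a prompt table. The exact RGB values, the function names, the RGBA byte layout, and the direction of the y axis are assumptions; only "red is volcanic" and "blue is ocean" come from the description above.

```javascript
const MAP_SIZE = 1000; // the noise map is 1000x1000

// Hypothetical palette: the exact RGB values are made up for this example.
const BIOME_PROMPTS = {
  "255,0,0": "a volcanic landscape",
  "0,0,255": "an ocean",
};

// Cell (0,0) maps to the center of the map; returns null outside the map.
function cellToPixel(cx, cy) {
  const half = MAP_SIZE / 2;
  const px = cx + half;
  const py = cy + half;
  if (px < 0 || px >= MAP_SIZE || py < 0 || py >= MAP_SIZE) return null;
  return { px, py };
}

// `rgba` is a flat array of RGBA bytes, row by row (the layout ImageData uses).
function biomePromptAt(cx, cy, rgba) {
  const pixel = cellToPixel(cx, cy);
  if (!pixel) return null;
  const i = (pixel.py * MAP_SIZE + pixel.px) * 4;
  const colorKey = `${rgba[i]},${rgba[i + 1]},${rgba[i + 2]}`;
  return BIOME_PROMPTS[colorKey] ?? null; // null when the color is not in the palette
}
```

The same function could serve a second map for features by swapping in a different prompt table.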

For added dynamism, a features map comes into play. It's the same mechanism, but it maps to feature prompts instead. For example: gray maps to a castle, blue to a river, purple to a night festival, and black means no feature. With this trick, we get all combinations of biomes and features for free. Finally, a compound prompt is built, such as:

    let prompt = `Extend the existing image as a world set in ${biome_prompt}...`;

    // The feature part is only appended when the features map yields one.
    if (feature_prompt) {
      prompt = `${prompt}. This part of the world has a prominent feature: ${feature_prompt}`;
    }

A final prompt may look something like this: "Extend the existing image as a world set in a mystical forest city hidden within gigantic, ancient trees. This part of the world has a prominent feature: A fantasy castle town."

But… if the biome maps are 1000x1000, doesn't that mean the canvas is only 1000x1000 too??? Technically, yeah: it's just one million cells. And BTW, generating that would cost me around $40k. But no worries. If the canvas ever even gets close to filling up, we'll just extend the maps procedurally. We could actually make it pretty much infinite.

# Loading an "infinite" image

If we tried to load all cells onto the user's browser, it would blow up pretty quickly. Not only that, but even if this project doesn't go viral, 10 users loading 1,000,000-cell grids, all pinging my backend in parallel, isn't something my $5/month Hetzner VPS could handle very easily. To get around this, I did three things: Lazy loading, a lot of caching, and updating on writes.

## Lazy Loading

For many of you, this may not come as a surprise, but not all cells in the canvas are loaded at the same time: only the ones visible in the viewport are loaded, avoiding unnecessary work.
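One way to work out which cells are visible is plain arithmetic on the viewport rectangle. This is a sketch under my own assumptions (overlapping tiles placed one stride apart, viewport given in canvas pixels, hypothetical names), not the code running on the site.

```javascript
// viewport: { left, top, width, height } in canvas pixels.
// A cell at index c covers [c * stride, c * stride + tile) on each axis.
function visibleCells(viewport, tile, overlap) {
  const stride = tile - overlap;
  // Smallest and largest indices whose tiles still intersect the viewport.
  const minX = Math.floor((viewport.left - tile) / stride) + 1;
  const maxX = Math.ceil((viewport.left + viewport.width) / stride) - 1;
  const minY = Math.floor((viewport.top - tile) / stride) + 1;
  const maxY = Math.ceil((viewport.top + viewport.height) / stride) - 1;

  const result = [];
  for (let y = minY; y <= maxY; y++) {
    for (let x = minX; x <= maxX; x++) {
      result.push({ x, y });
    }
  }
  return result;
}

// A 1000x600 viewport at the origin with 1024px tiles and a 256px overlap.
const visibleNow = visibleCells({ left: 0, top: 0, width: 1000, height: 600 }, 1024, 256);
```

Only the cells in that list need a request; everything else can wait until the user pans or zooms.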

## A Lot of Caching

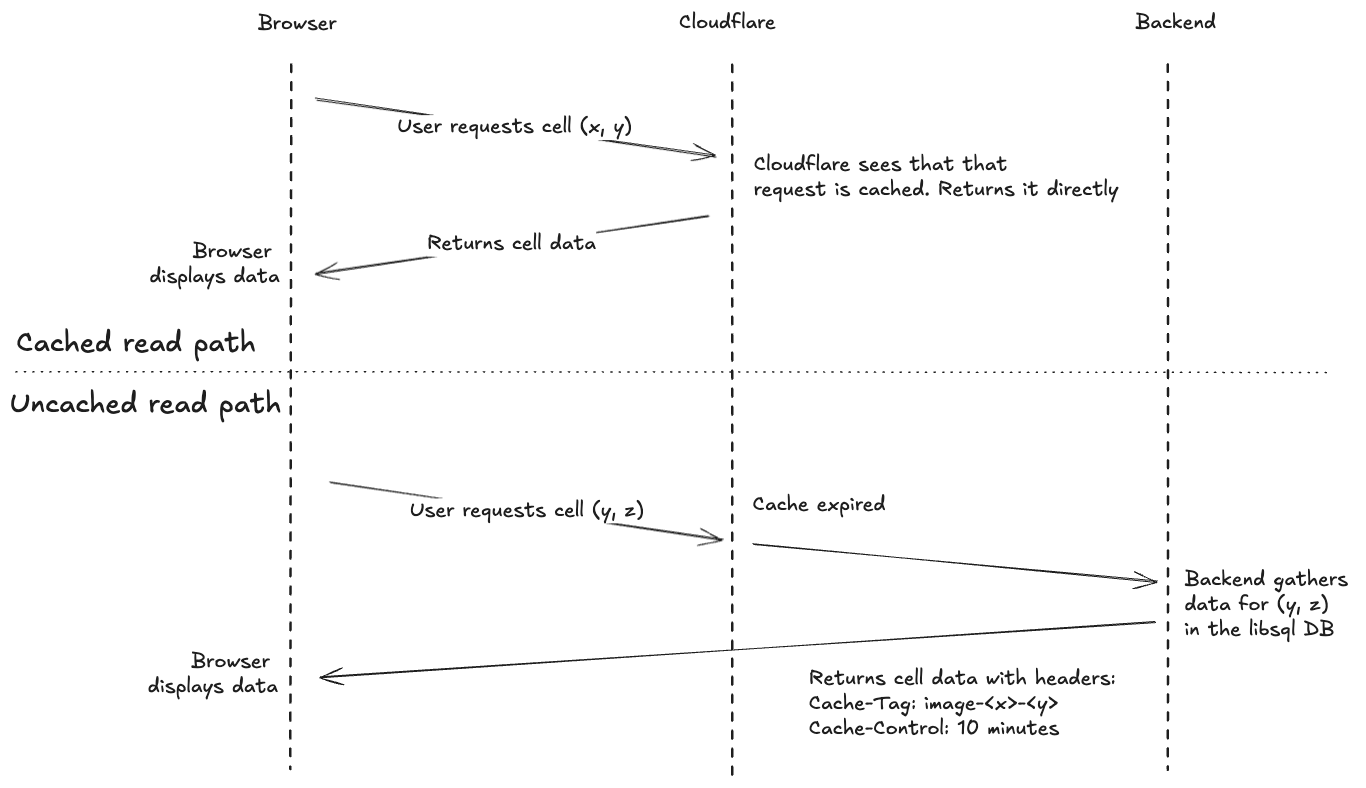

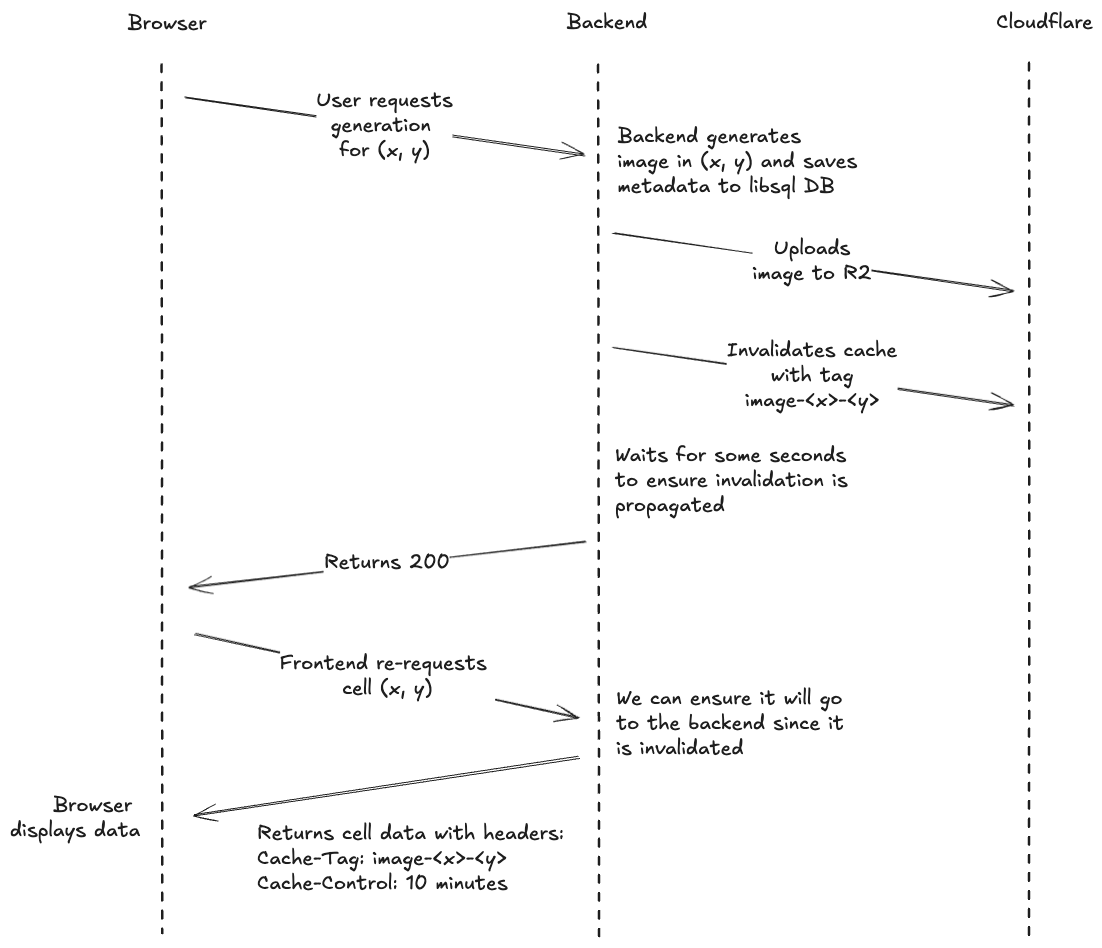

The data fetching process is relatively straightforward: Each cell has a coordinate (e.g. 3,-5). Then, the cell calls my backend to retrieve some information such as the status (if it's generating, generated, pending to be generated, or simply empty), model used, image URL, and so on. A naive approach for caching could be to just cache all these requests for 5 minutes in Cloudflare's CDN. The problem with that is it would lose a lot of the real-timeness:

- User A loads the page.

- User B generates a new image 1 second later.

- User A would have to wait for 5 minutes to see the new image.

It also breaks for the user doing the generating:

- User generates an image.

- Frontend immediately fetches the same coordinate to gather the generation info.

- Since the cell is already cached, the old response is returned, and the cell is still painted as empty.

I could have made the /generate endpoint return fresh data, but that wouldn't fix the real-timeness problem, so I decided to go the following route:

The read path is as we would expect, nothing special except for the Cache-Tag header that the server responds with. This is like an ID for Cloudflare, so I can later invalidate it.

The write path is where the magic happens: since we know that a cell is updated only when a new image is generated, we invalidate the Cache-Tag in Cloudflare at that moment, so subsequent reads will be up-to-date.

The last missing piece here is that non-generated cells poll every 10 seconds, so all other online users will see the change within that timeframe, without ever hitting my backend.
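Here is a hedged sketch of both paths. The tag format, the function names, and the `Cache-Control` values are assumptions; the purge call uses Cloudflare's purge-cache endpoint with a `tags` body, and it assumes the zone is configured to cache these JSON responses in the first place (Cloudflare does not cache JSON by default). The code only builds the request, so nothing is sent until you pass it to `fetch`.

```javascript
// Hypothetical tag format: one tag per cell, e.g. "cell_3_-5".
function cellCacheTag(x, y) {
  return `cell_${x}_${y}`;
}

// Read path: headers the backend could attach to a cell's JSON response.
function cellResponseHeaders(x, y) {
  return {
    "Content-Type": "application/json",
    // Assumed policy: browsers revalidate, the CDN keeps the response for 5 minutes.
    "Cache-Control": "public, max-age=0, s-maxage=300",
    "Cache-Tag": cellCacheTag(x, y),
  };
}

// Write path: build the purge-by-tag request to send once an image is generated.
function buildPurgeRequest(zoneId, apiToken, x, y) {
  return {
    url: `https://api.cloudflare.com/client/v4/zones/${zoneId}/purge_cache`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ tags: [cellCacheTag(x, y)] }),
    },
  };
}
```

After a generation finishes, something like `const { url, init } = buildPurgeRequest(zoneId, token, 3, -5); await fetch(url, init);` would drop the cached response for that cell, and the next poll from any user would fetch fresh data.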

Footnote: Reality is a bit more complex since images are not generated in real-time but rather added to a queue, but the principle is the same.

Second Footnote: Cloudflare's free tier is something out of this world. Everything I'm listing here is 100% free, regardless of traffic, plus there are no egress fees for R2 public buckets.

## Updating on Writes

When a user requests a cell's information, we need to determine if it's at the border of the generated area. One approach would be to check this on every read by examining if the surrounding coordinates are generated. However, with our high read-to-write ratio, this would be inefficient.

I've spoiled this a bit in the previous point, but we can take advantage of the fact that the canvas state only changes during image generation. Instead of calculating border status on every read, we update this information in the database when a new image is generated. This dramatically reduces computation overhead.
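To illustrate the idea, here is a small in-memory sketch. The real project stores this in a database, and I'm assuming a specific meaning of "border": an empty cell with at least one generated neighbour, which is where a generate button would make sense. The names and the four-neighbour rule are mine.

```javascript
// In-memory stand-in for the database: "x,y" -> { status, generatable }.
const cellStore = new Map();
const cellKey = (x, y) => `${x},${y}`;
const NEIGHBOR_OFFSETS = [[1, 0], [-1, 0], [0, 1], [0, -1]];

// Read path: a single lookup, no neighbour checks.
function readCell(x, y) {
  return cellStore.get(cellKey(x, y)) ?? { status: "empty", generatable: false };
}

// Write path: runs once per generated image and pays the neighbour cost here.
function onImageGenerated(x, y) {
  cellStore.set(cellKey(x, y), { status: "generated", generatable: false });
  for (const [dx, dy] of NEIGHBOR_OFFSETS) {
    const neighbor = readCell(x + dx, y + dy);
    if (neighbor.status === "empty") {
      // Empty cells next to a generated one become part of the border.
      cellStore.set(cellKey(x + dx, y + dy), { ...neighbor, generatable: true });
    }
  }
}
```

Reads stay a single key lookup no matter how large the canvas gets, while the four neighbour updates happen only on the comparatively rare writes.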

Additionally, when a new image is generated, it is saved in multiple resolutions (e.g., 1024x1024, 512x512, 256x256). When the user is zoomed out, the system loads the version closest to the required resolution, which helps reduce loading time.
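Picking a version could look roughly like this. I'm reading "closest" as "the smallest stored size that still covers the on-screen size", which is an assumption, as are the function name and the `devicePixelRatio` parameter; the list of sizes comes from the examples above.

```javascript
// Sizes taken from the example resolutions mentioned in the text.
const AVAILABLE_SIZES = [256, 512, 1024];

// tileSize: full tile size in canvas pixels; zoom: 1 means 100%.
function pickSize(tileSize, zoom, devicePixelRatio = 1) {
  const needed = tileSize * zoom * devicePixelRatio; // pixels the tile occupies on screen
  const sorted = [...AVAILABLE_SIZES].sort((a, b) => a - b);
  // Smallest version that is large enough, or the largest one when zoomed in past it.
  return sorted.find((size) => size >= needed) ?? sorted[sorted.length - 1];
}
```

At 20% zoom a 1024px tile only needs about 205 pixels, so the 256px version is enough and the browser downloads a fraction of the bytes.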

# Ah, and this is expensive!

My goal with this project is to make the canvas as large as possible, but as you can probably guess, that's pretty expensive. That's why I've added a paywall—so anyone who enjoys it can help keep it going. The idea is not just to cover the costs (after Stripe's fees, it's pretty much at cost anyway), but also to build something together. Every contribution helps the canvas grow bigger and more interesting, turning this from my little experiment into something everyone can be part of. You're not just paying for pixels; you're helping create a cool, community-driven piece of art.

Gabi Ferraté

[1] When I first started experimenting, the gradual distortion reminded me of William Utermohlen's self-portraits, and how they slowly lost clarity and detail. It felt similar to how our own memories fade and change over time, inspiring the term "digital dementia."